AI Data Engineer

Agentic Data Agents for your Databricks Workspace

Autonomously build and manage data pipelines for your most complex engineering tasks in Databricks.

Built for Complexity.

Designed for Autonomy.

“Data-driven innovation is essential to enterprise success. AI-driven solutions like Osmos supercharge data engineers and empower business analysts to tackle complex data challenges. By leveraging the full power of the Databricks platform, Osmos helps organizations unlock the true potential of both their data and their people. Together, Osmos and Databricks are helping organizations transform data into a catalyst for innovation.”

— Bryan Smith, Head of Industry Solutions, Consumer Industries, Databricks

Where Traditional ETL Taps Out, Osmos Steps In

How the AI Data Engineer goes beyond traditional ETL tools

Autonomous by Design

Tell us what you want, and leave the engineering to your AI Data Engineer.

Built to Adapt

Handles complex data, massive datasets, and intricate transformations with ease.

Code You Can Trust

Autonomously designs, builds, and executes test plans, ensuring reliable, production-ready code.

Built for Complexity. Designed for Autonomy.

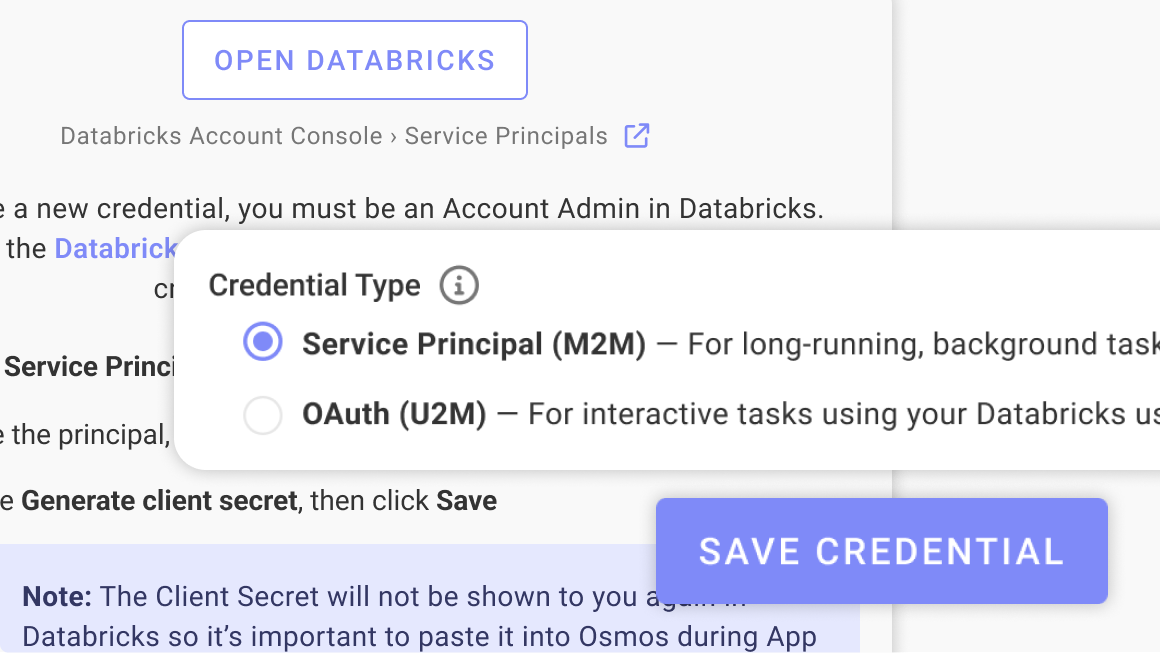

Set up Databricks credentials for Osmos

Give the Osmos AI Data Engineer safe access to your Databricks instance. It respects the catalog and workspace access defined by your Databricks admin.

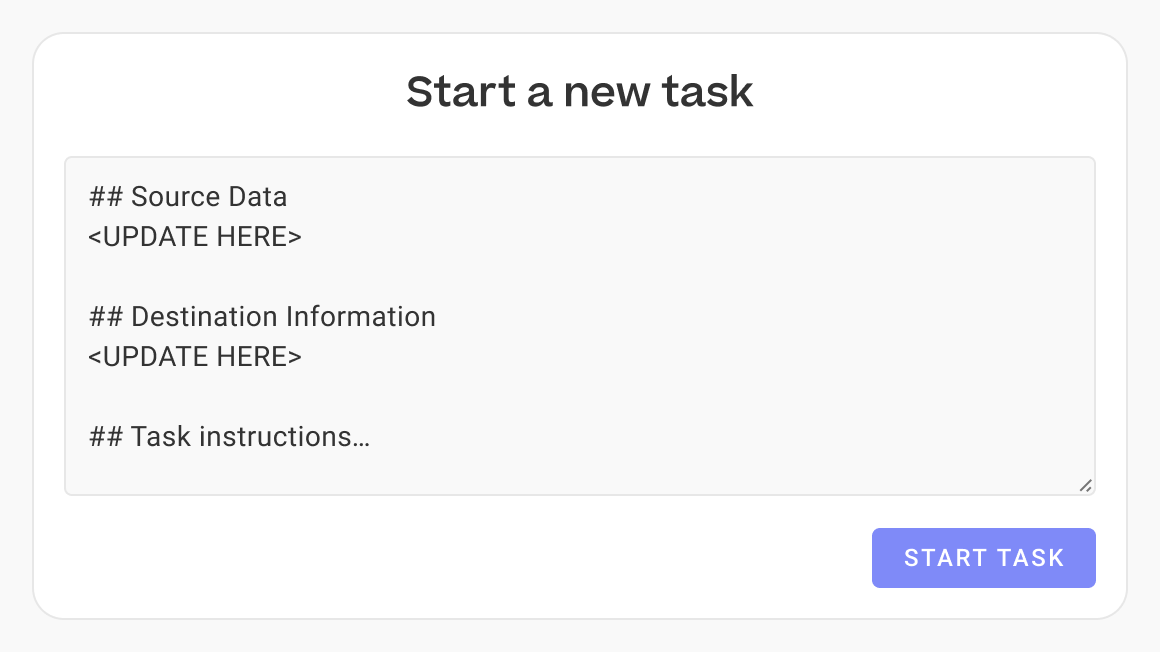

Configure your Osmos AI Data Engineer

Point the AI Engineer to your code and give it tasks.

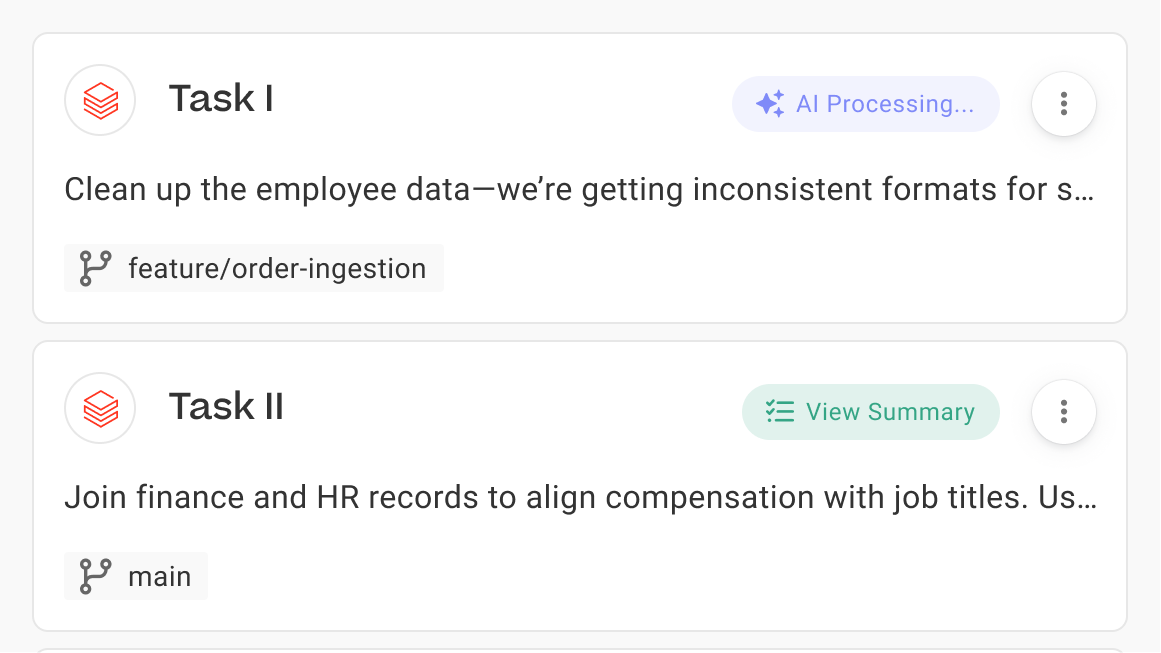

Grab a cup of coffee while your task completes

The AI Engineer makes copies of the code and schema in the safety of your environment. It then makes the necessary changes, updates the code and its copy of the tables, and exhaustively validates its own work.

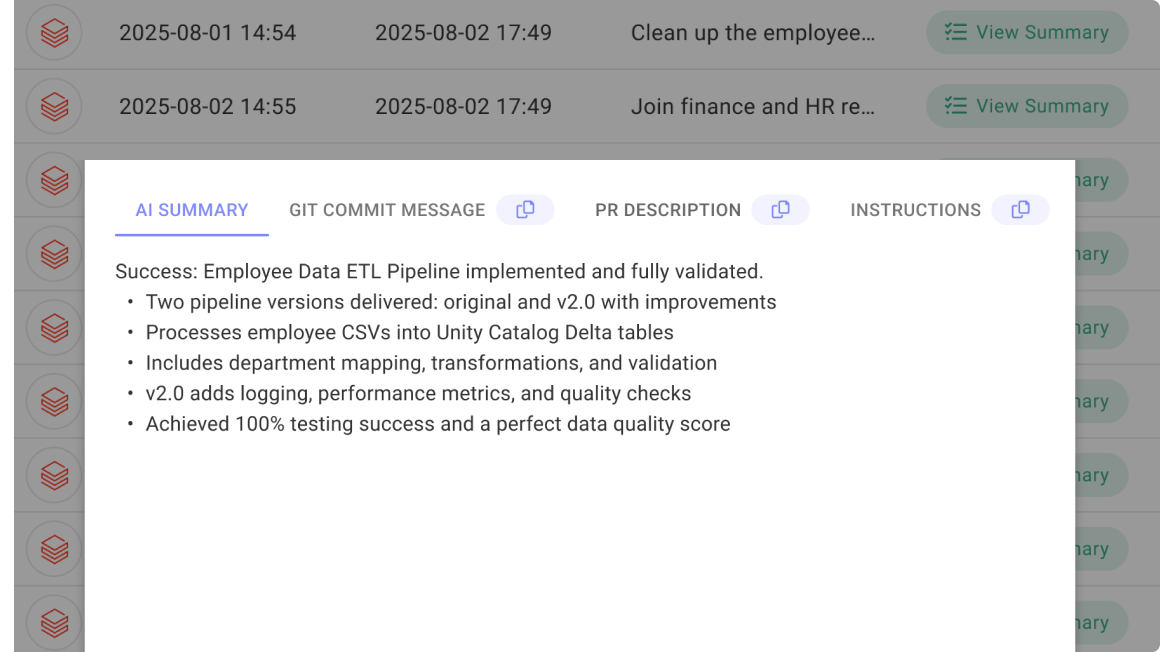

Review and approve

Review the AI Engineer's work and merge in the changes.

AI Agents for Microsoft Fabric now available.
